Sights & Sounds of Memory Lane

Parsons BFA Thesis

Timeframe

6 months

Tools

Processing + Minim Library, HTML, CSS, JavaScript

Description

“Sights and Sounds of Memory Lane” explores the intersection of memory and audio through creative coding and generative design.

Visualizing the sounds attached to memories offers a new way of experiencing and remembering those moments. The visuals can even deepen one’s understanding of a memory or strengthen one’s emotional connection to it.

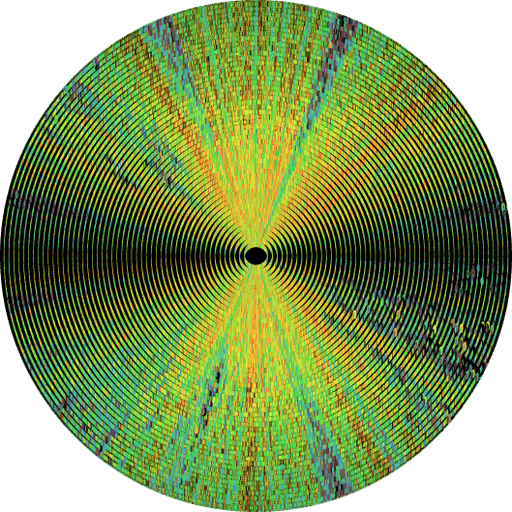

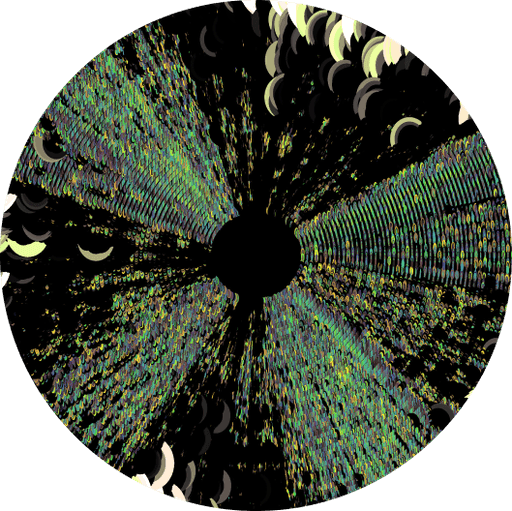

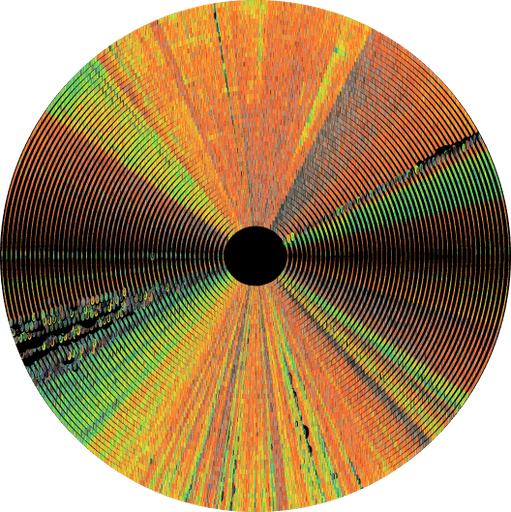

Each visual is generated in Processing, using the Minim library to extract data from the audio input. The audio is loaded into the sketch, and its frequency spectrum and amplitude are used to draw the image. The image is composed entirely of circles, and the size and color of each one are controlled by the audio input. The project sets out to create data-driven visuals that encapsulate the emotional journey of the memories associated with each piece of audio.
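In Minim, an `FFT` object analyzes an audio buffer and exposes the spectrum band by band (`specSize()`, `getBand(i)`), and Processing's `map()` rescales a value from one range to another. The generator code itself is not included in this write-up, so the following is only a minimal TypeScript sketch of the mapping step, assuming one spectrum frame has already been extracted into an array of band amplitudes. The names `mapRange`, `clampValue`, and `circleDiameters`, and the size bounds, are hypothetical.

```typescript
// Hypothetical helper mirroring Processing's map(): linearly rescale `value`
// from [inMin, inMax] to [outMin, outMax].
function mapRange(value: number, inMin: number, inMax: number, outMin: number, outMax: number): number {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Keep a value inside [lo, hi], like Processing's constrain().
function clampValue(value: number, lo: number, hi: number): number {
  return Math.min(Math.max(value, lo), hi);
}

// Turn one spectrum frame (one amplitude per frequency band) into circle
// diameters. Louder bands produce larger circles; amplitudes above `maxAmp`
// are clamped so a single peak cannot dominate the image.
function circleDiameters(spectrum: number[], maxAmp: number, minDiameter = 1, maxDiameter = 8): number[] {
  return spectrum.map((amp) =>
    mapRange(clampValue(amp, 0, maxAmp), 0, maxAmp, minDiameter, maxDiameter),
  );
}

// Example: a four-band frame with a silent, two mid-level, and one clipped band.
const exampleFrame = [0, 5, 10, 40];
console.log(circleDiameters(exampleFrame, 20)); // → [1, 2.75, 4.5, 8]
```

Clamping before mapping keeps an unusually loud band from producing a circle larger than `maxDiameter`; Processing's `constrain()` plays the same role inside a sketch.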

The resulting visuals resemble the iris of an eye, a CD, a record label, genome sequence mapping, and even hard drive defragmentation. Despite their drastic differences, these share two commonalities: the archiving of memory (or, in this case, information) and a similar visual language. In Sights & Sounds of Memory Lane, audio is treated as a form of memory, and the generative visuals serve as an archive of each memory’s emotional journey.

Featured in the 01.24 Edition of PAGE Magazine.

English Translation:

For Zoyah Shah, summer evenings from her childhood sound like "Imagine" by John Lennon, and "I Wanna Know" by RL Grime brings back dancing in the rain at a festival. Sounds and music are inextricably intertwined with our memory and often awaken very specific recollections. But can these special moments also be visualized? The newly minted graduate spent nine months pursuing this question during her Bachelor of Fine Arts at Parsons School of Design in New York.

Shah used the first weeks of her project for research and conception. She discovered how different sensory impressions are interwoven with memories and experimented with ways to visualize sound, from 3D modeling to interactive book formats to AI-generated images made with RunwayML. She repeatedly ran into the same problem, however: the results varied greatly and were difficult to compare. Yet it was important to Shah to present the visualized sounds in an easily accessible way and to be able to share them with other people. That is why she ultimately chose a creative coding approach in Processing, which produced reproducible results.

To do this, she developed a program using the Minim library (https://code.compartmental.net/minim) that generates diagrams from various sounds and songs. The diagrams consist of small ellipses whose size and color are determined by the amplitude. The ellipses form concentric rings that represent the entire frequency spectrum of the audio track, while the length of the track defines the size of the inner black ring. The result is a set of circular visuals whose shimmering colors reveal different patterns in the music and awaken associations.

Shah ultimately applied her program to her own memories set to music, from songs that are particularly important to her to the recorded sound of a whole day at the university. She then publicly archived the sounds, visualizations, and associated stories on her self-programmed website, Sights and Sounds of Memory Lane (https://zoyah74.github.io/sights-and-sounds-of-memory-lane.github.io/). There, users can filter by type of sound, look at the wonderful graphics side by side at different sizes, or read and listen to individual stories. Visitors can even submit their own sounds, memories, and names, which Shah then visualizes and adds to the archive.
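The magazine's description suggests a polar layout: each frequency band becomes one concentric ring, and the track length sets the radius of the inner black ring. How the original sketch maps time and duration onto the circle is not documented, so this TypeScript sketch assumes that angle encodes position in time, ring index encodes frequency band, and the inner radius grows linearly with duration up to a cap. `ringLayout`, `innerRadiusFor`, `PlacedCircle`, and all constants are hypothetical.

```typescript
// One circle placed on the canvas, in coordinates relative to the canvas center.
interface PlacedCircle {
  x: number;
  y: number;
  band: number; // frequency band index, 0 = lowest (innermost ring)
  frame: number; // time step index along the ring
}

// Assumption: the empty inner ring grows with track length, capped so long
// recordings still leave room for the spectrum rings.
function innerRadiusFor(durationSec: number, pxPerSec = 0.5, maxRadius = 150): number {
  return Math.min(durationSec * pxPerSec, maxRadius);
}

// Place one circle per (frame, band) pair: each band gets its own ring, and
// frames are spread evenly around the full circle.
function ringLayout(frames: number, bands: number, durationSec: number, ringSpacing = 4): PlacedCircle[] {
  const inner = innerRadiusFor(durationSec);
  const circles: PlacedCircle[] = [];
  for (let f = 0; f < frames; f++) {
    const angle = (2 * Math.PI * f) / frames;
    for (let b = 0; b < bands; b++) {
      const r = inner + b * ringSpacing;
      circles.push({ x: r * Math.cos(angle), y: r * Math.sin(angle), band: b, frame: f });
    }
  }
  return circles;
}

// Example: 120 seconds of audio analyzed into 360 frames of 64 bands.
const layout = ringLayout(360, 64, 120);
console.log(layout.length); // → 23040
```

In a Processing sketch, the same positions would be drawn after `translate(width / 2, height / 2)`, with each circle's size and color taken from its band's amplitude in that frame.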

Topic

Music and sound are capable of evoking emotions that bring people back to specific memories. I often find myself connecting certain people, places, and experiences to specific songs, and wanted a way to visualize the emotional journey that each song has.

Question

How can we use design to visualize our memories and auditory experiences?

Research + Precedents

Creating the Visuals

Sights & Sounds of Memory Lane explores the intersection of memory and audio through generative design and creative coding.

I created the generative visuals in Processing, using the Minim Library.

The visuals I created are composed entirely of circles that vary in size and color, both of which are determined by the amplitude of the audio input. I assigned separate color ranges to low and high amplitudes to create variation throughout the visuals.
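One plausible reading of assigning color ranges to low and high amplitudes is a gradient between a "quiet" color and a "loud" color, similar to Processing's `lerpColor(c1, c2, amt)`. The following TypeScript sketch assumes amplitude has already been normalized to 0..1; the endpoint colors and the names `colorForLevel` and `toHex` are hypothetical, not the project's actual palette.

```typescript
type RGB = [number, number, number];

// Hypothetical endpoints: a cool color for quiet moments, a warm one for loud ones.
const LOW_COLOR: RGB = [40, 60, 160];
const HIGH_COLOR: RGB = [250, 180, 60];

// Blend linearly between the endpoints in RGB, in the spirit of lerpColor().
// `level` is amplitude normalized to 0..1 and is clamped so out-of-range
// input still yields a valid color.
function colorForLevel(level: number, low: RGB = LOW_COLOR, high: RGB = HIGH_COLOR): RGB {
  const t = Math.min(Math.max(level, 0), 1);
  return [
    Math.round(low[0] + (high[0] - low[0]) * t),
    Math.round(low[1] + (high[1] - low[1]) * t),
    Math.round(low[2] + (high[2] - low[2]) * t),
  ];
}

// Format as a hex string (e.g. for a web preview), zero-padding each channel.
function toHex(c: RGB): string {
  return "#" + c.map((v) => v.toString(16)).map((h) => (h.length === 1 ? "0" + h : h)).join("");
}

console.log(toHex(colorForLevel(0.5))); // → #91786e
```

Interpolating in RGB keeps the example short; blending in HSB (Processing's `colorMode(HSB)`) is an alternative if the in-between colors look muddy.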

The visuals I produced resemble the iris of an eye, a CD, a record, genome sequence mapping, and even hard drive defragmentation. While these sound extremely different from one another, they share a few qualities: each serves as a form of archive, whether of memory or, more specifically, of data and information, and each achieves this with a similar visual identity.

In my project, audio is treated as a form of memory, and the visuals serve as an archive of the memory.

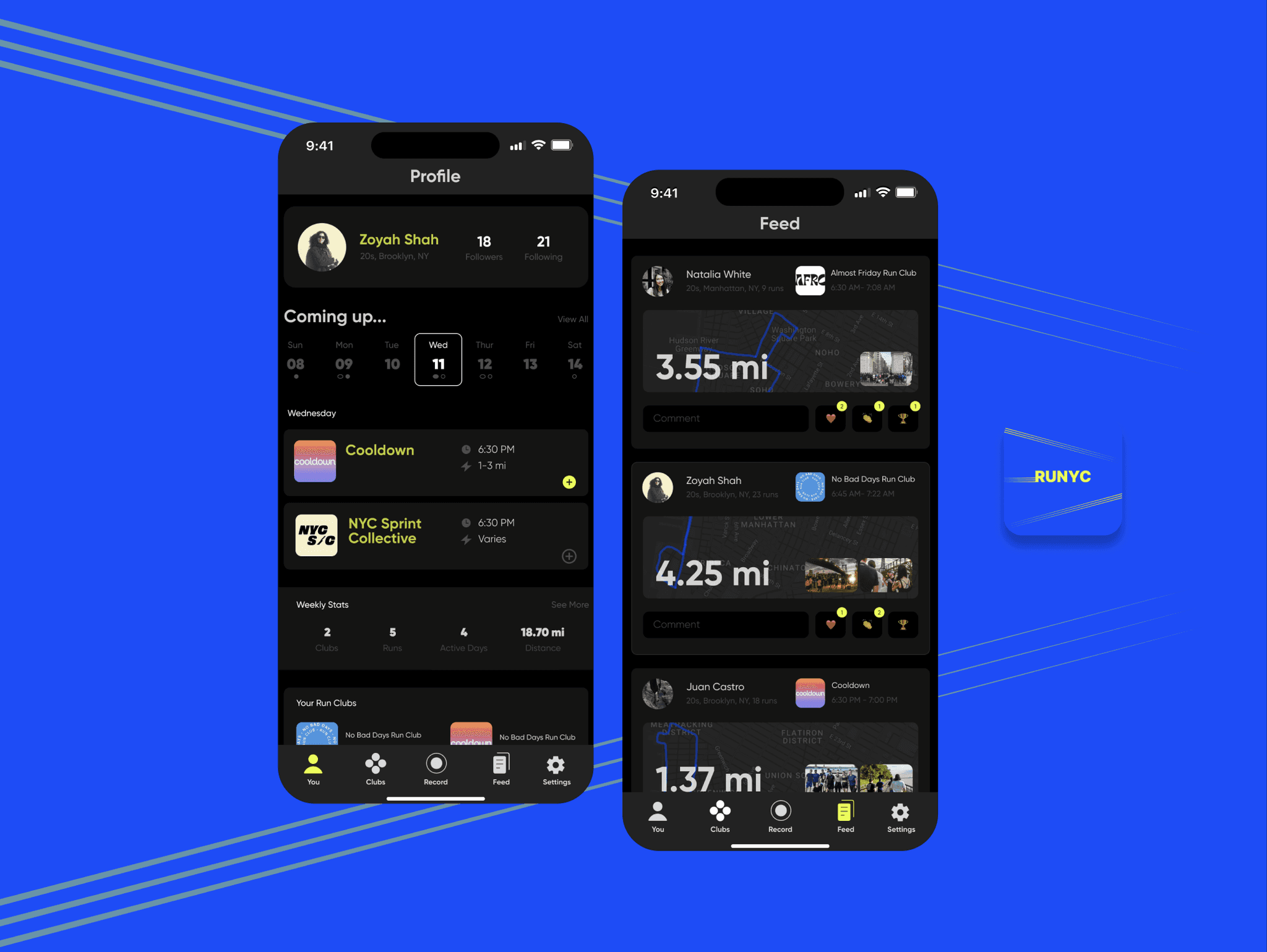

Putting Together the Website

The website serves as an archive of all the visuals I made.

Archive / Home Page

On this page you can see all the visuals and filter by the different categories. You can also view the visuals at any size using the slider.

Types of audio:

Music

Songs.

Daily Audio

10 seconds of audio recorded every hour throughout my day.

Significant Moments

Culturally significant audio that we all remember, such as Steve Jobs announcing the first iPhone.

Recorded Audio

Audio from videos in my camera roll.

Submissions

Any type of audio submitted to the website.

This page is intentionally designed to show nothing but the visuals, so users can simply click whichever ones catch their eye.
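The archive page's category filter and size slider can be sketched in TypeScript as two small functions. The category names come from the audio types listed above; the `Visual` shape, the "All" option, and the pixel bounds for the slider are assumptions for illustration.

```typescript
// Audio categories as listed on the archive page.
type AudioType = "Music" | "Daily Audio" | "Significant Moments" | "Recorded Audio" | "Submissions";

// Hypothetical shape of one archive entry.
interface Visual {
  id: string;
  title: string;
  type: AudioType;
  image: string; // URL of the rendered visual
}

// "All" keeps every visual; a category keeps only matching entries.
function filterByType(visuals: Visual[], selected: AudioType | "All"): Visual[] {
  return selected === "All" ? visuals.slice() : visuals.filter((v) => v.type === selected);
}

// Map a range slider value (0-100) to a thumbnail width in pixels.
function thumbnailWidth(sliderValue: number, minPx = 60, maxPx = 600): number {
  const t = Math.min(Math.max(sliderValue, 0), 100) / 100;
  return Math.round(minPx + t * (maxPx - minPx));
}

const sample: Visual[] = [
  { id: "a", title: "Example song", type: "Music", image: "a.png" },
  { id: "b", title: "Example hour", type: "Daily Audio", image: "b.png" },
  { id: "c", title: "Another song", type: "Music", image: "c.png" },
];
console.log(filterByType(sample, "Music").length); // → 2
console.log(thumbnailWidth(50)); // → 330
```

In the browser, the slider's `input` event could apply the returned width to every thumbnail, for example through a CSS custom property, so resizing does not require rebuilding the grid.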

Visuals

Users can click any visual to find out what it is. On its page, they can listen to the audio and read the associated memory.

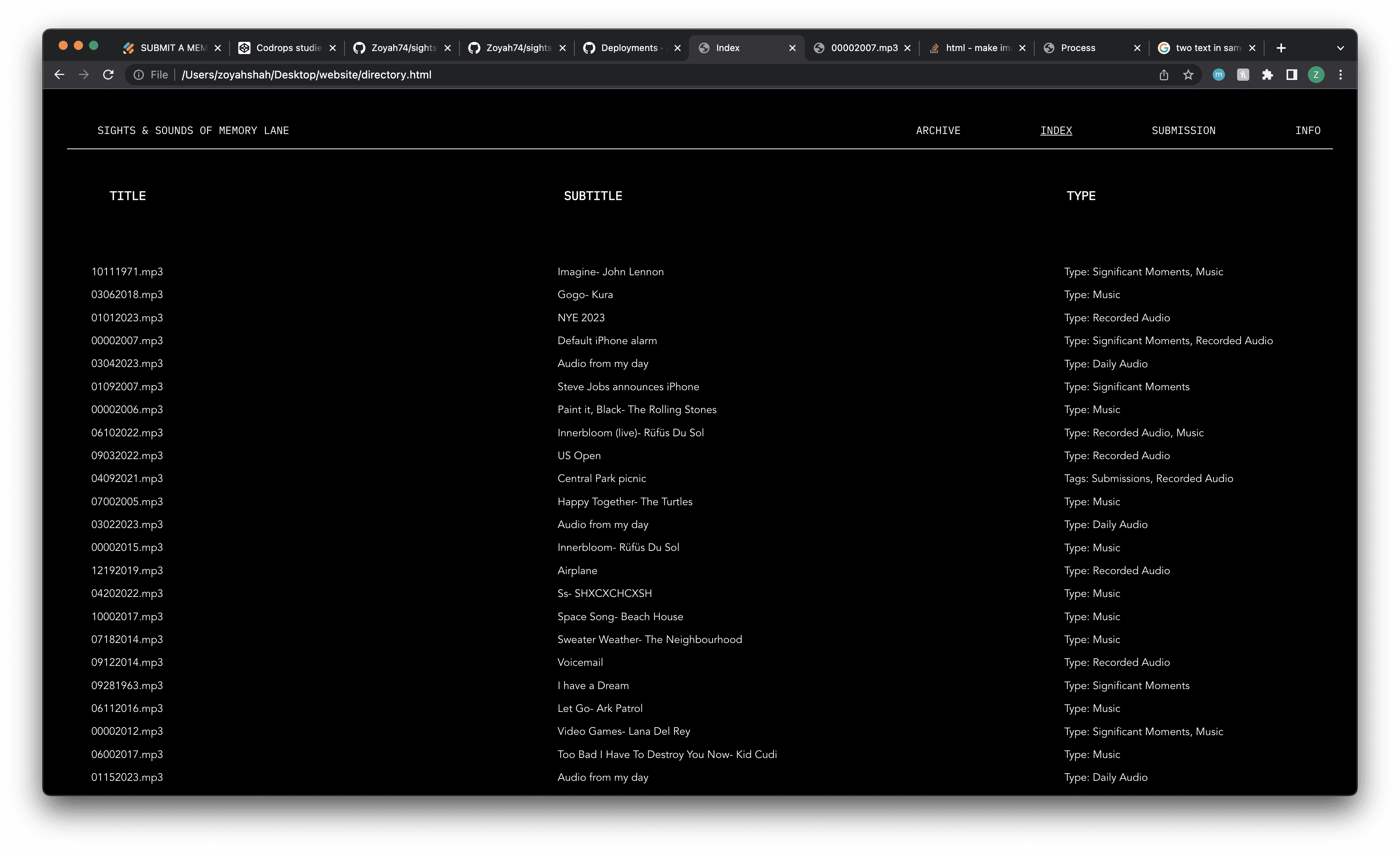

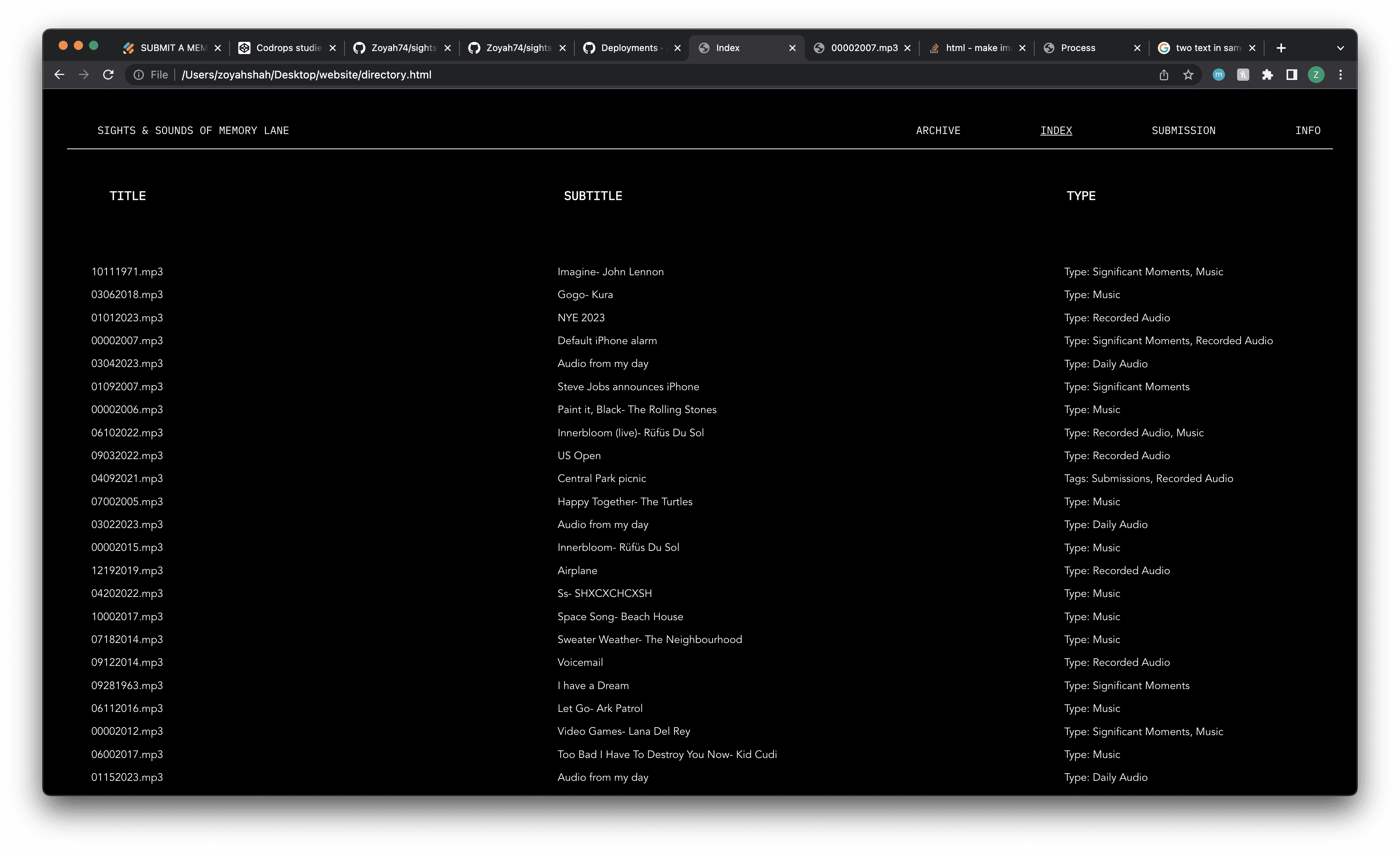

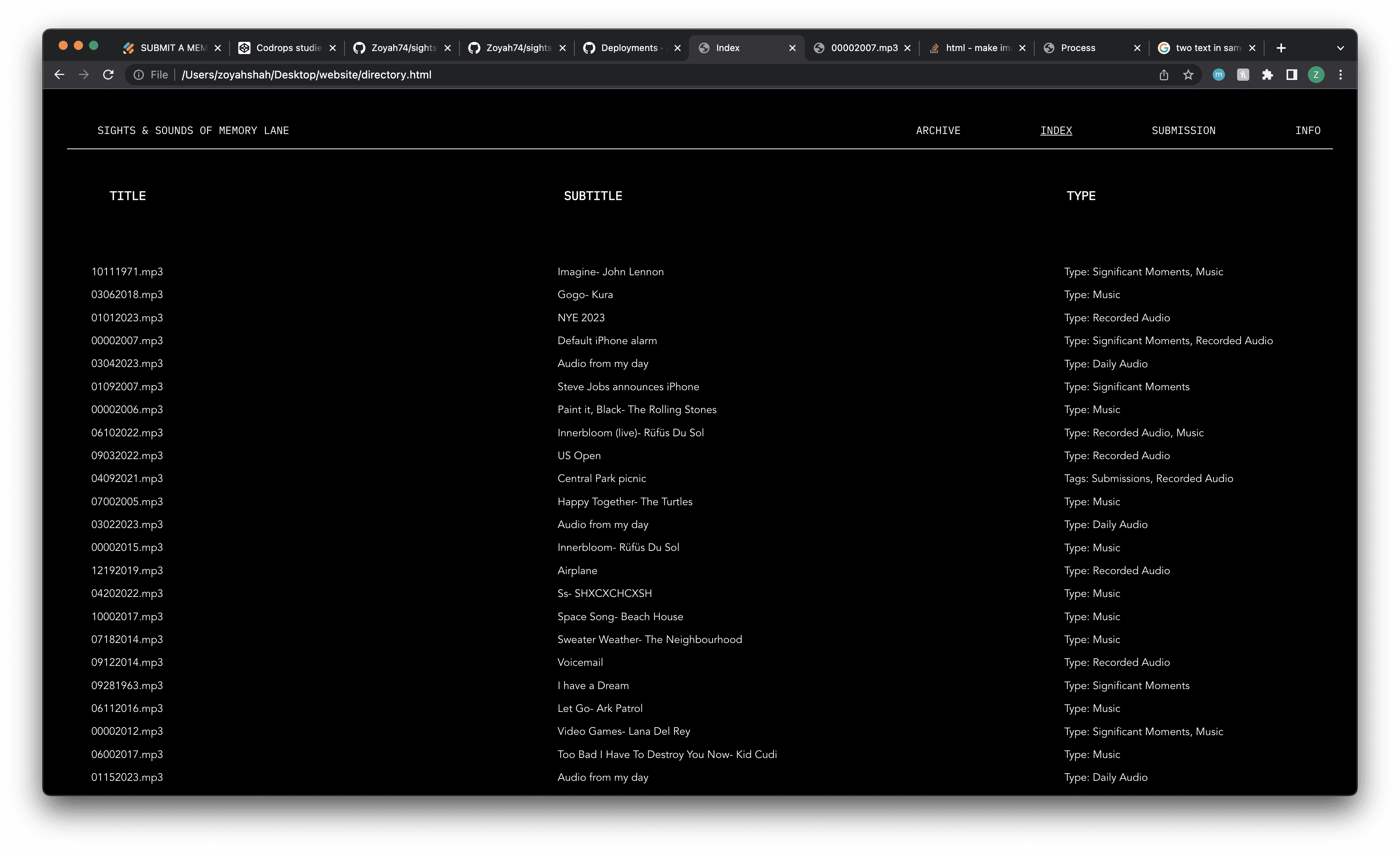

Index

The Index page is the opposite of the archive page: it shows only the name of each audio, its subtitle, and its type, with no visual. Clicking any entry opens the same informational page as clicking the corresponding visual.
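Because the Index page shows only text and links into the same detail page, each row can be generated from a record's name, subtitle, and type. This TypeScript sketch assumes a hypothetical `visual.html?id=<record id>` URL scheme; `IndexEntry`, `detailUrl`, `indexRow`, `buildIndex`, and `escapeHtml` are illustrative names, not taken from the project.

```typescript
// Hypothetical shape of one index entry.
interface IndexEntry {
  id: string;
  name: string;
  subtitle: string;
  type: string;
}

// Escape text before inserting it into HTML markup.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Assumed URL scheme: every entry links to one shared detail template,
// which reads the record id from the query string.
function detailUrl(id: string): string {
  return "visual.html?id=" + encodeURIComponent(id);
}

// One text-only row: linked name, subtitle, and audio type; no image.
function indexRow(e: IndexEntry): string {
  return (
    `<li><a href="${detailUrl(e.id)}">${escapeHtml(e.name)}</a> ` +
    `<span>${escapeHtml(e.subtitle)}</span> <span>${escapeHtml(e.type)}</span></li>`
  );
}

// The whole index as a list, one row per entry.
function buildIndex(entries: IndexEntry[]): string {
  return "<ul>\n" + entries.map(indexRow).join("\n") + "\n</ul>";
}
```

Escaping matters here because submitted entries come from visitors, so their titles should not be inserted into the page as raw HTML.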

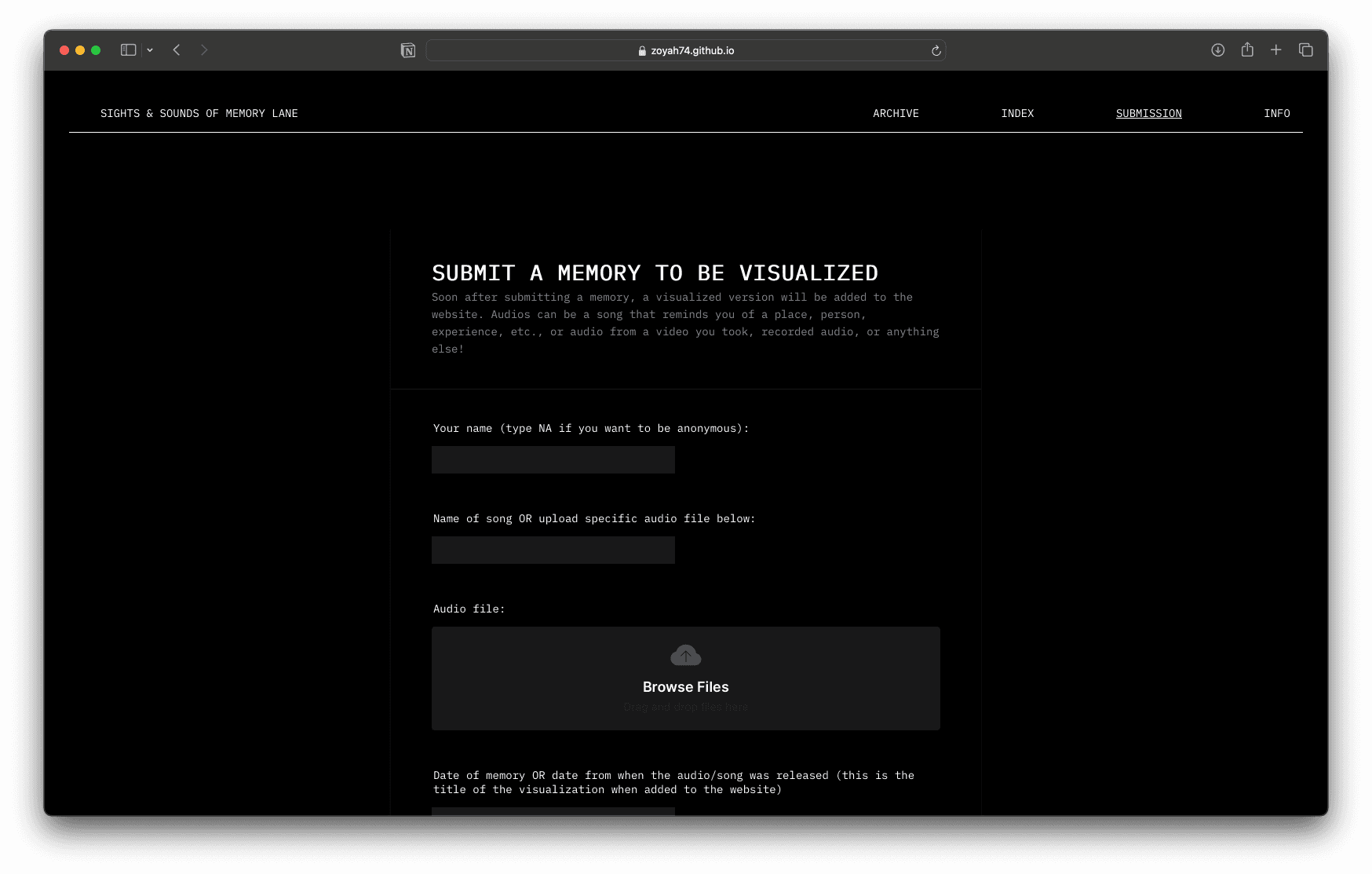

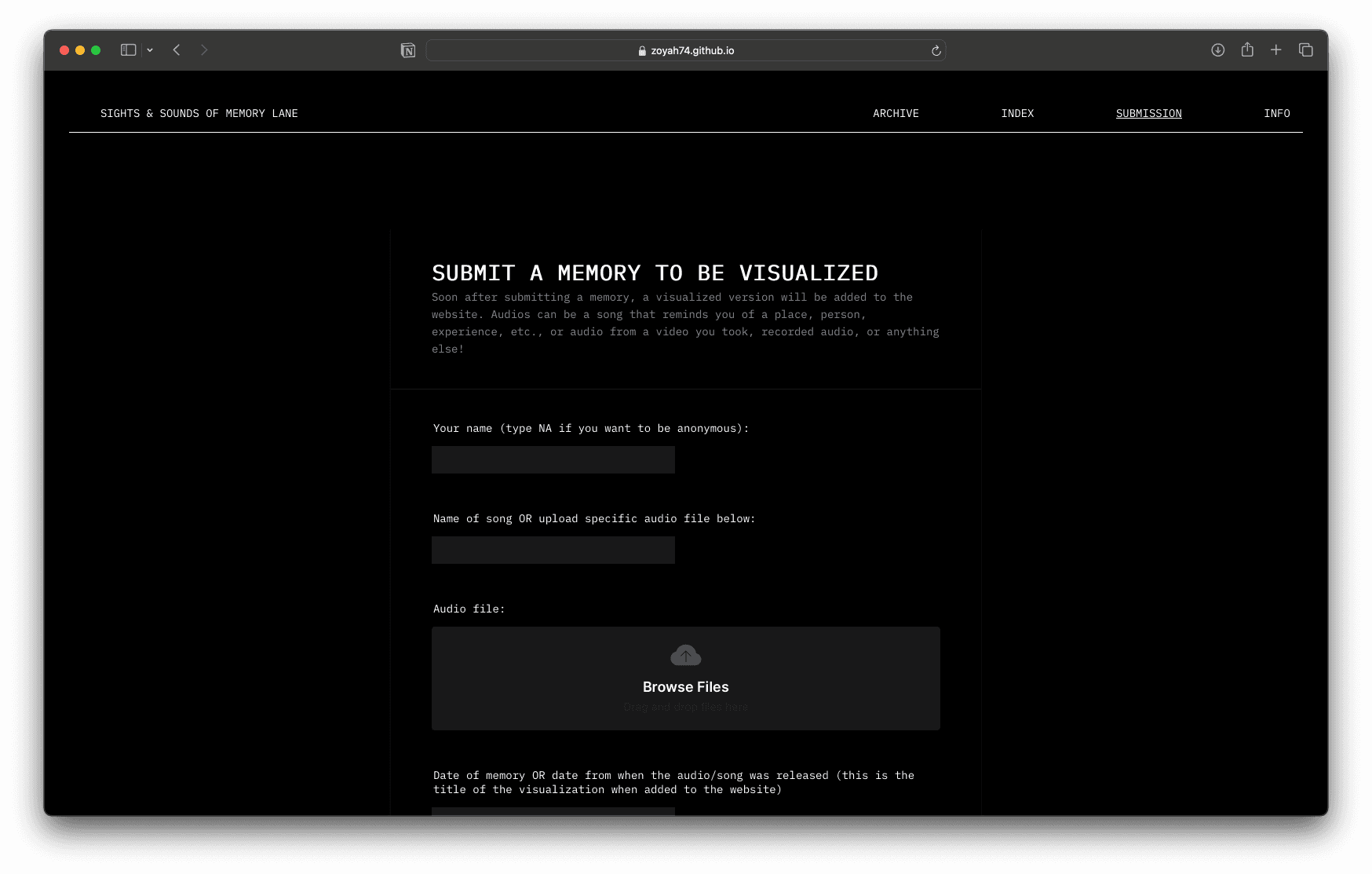

Submissions

On the submission page, visitors can submit audio to the website. Once a submission comes in, I visualize it in my generator and add it to the website.

Info

The Info page includes a synopsis of the project and a video of one of the visuals being generated.

I coded the website from scratch using HTML, CSS, and JavaScript. I used Airtable to store the information for each visual and connected it to my JavaScript so that a single template generates the page for each visual.
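Airtable's REST API lists a table's records with `GET https://api.airtable.com/v0/{baseId}/{tableIdOrName}` (authorized with a bearer token), returning JSON with a `records` array of `{ id, createdTime, fields }` objects plus an `offset` when more pages remain. The following TypeScript sketch covers only what happens around that request: building the list URL, finding one record by id, and filling a single page template. The column names in `VisualFields`, the markup, and the helper names are hypothetical, not the project's actual schema.

```typescript
// Shape of Airtable's "list records" response.
interface AirtableRecord<F> {
  id: string;
  createdTime: string;
  fields: F;
}
interface AirtableListResponse<F> {
  records: AirtableRecord<F>[];
  offset?: string; // present only when more pages remain
}

// Hypothetical column names; Airtable omits empty fields, so all are optional.
interface VisualFields {
  Name?: string;
  Subtitle?: string;
  Type?: string;
  Memory?: string;
  AudioUrl?: string;
  ImageUrl?: string;
}

// URL for one page of records; pass the previous response's offset to continue.
function listUrl(baseId: string, table: string, offset?: string): string {
  const url = "https://api.airtable.com/v0/" + baseId + "/" + encodeURIComponent(table);
  return offset ? url + "?offset=" + encodeURIComponent(offset) : url;
}

// Look up the record whose id came from the page's query string.
function findById<F>(resp: AirtableListResponse<F>, id: string): AirtableRecord<F> | undefined {
  for (const rec of resp.records) {
    if (rec.id === id) return rec;
  }
  return undefined;
}

// Escape a possibly missing field value for safe insertion into HTML.
function esc(s: string | undefined): string {
  return (s || "").replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// One template serves every visual; only the field values change.
function renderVisualPage(rec: AirtableRecord<VisualFields>): string {
  const f = rec.fields;
  return [
    `<h1>${esc(f.Name)}</h1>`,
    `<p class="subtitle">${esc(f.Subtitle)}</p>`,
    `<p class="type">${esc(f.Type)}</p>`,
    `<img src="${esc(f.ImageUrl)}" alt="${esc(f.Name)}">`,
    `<audio controls src="${esc(f.AudioUrl)}"></audio>`,
    `<p class="memory">${esc(f.Memory)}</p>`,
  ].join("\n");
}
```

In the browser, the parsed JSON from a `fetch` of `listUrl(...)` with an `Authorization: Bearer …` header would be passed to `findById` using the id read from `location.search`, and the returned markup inserted into the shared page.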
